IMPRESSIONS

January 2016 – December 2018

ANR Franco-Swiss Collaborative Project

First impressions matter. The IMPRESSIONS project aims at creating computational models of communicative behavior for virtual Embodied Conversational Agents (ECAs) in order to manage the first impressions of warmth and competence they make when interacting with their users. Moreover, non-intrusive techniques advancing the state of the art in physiological measurement are being used to detect or infer the user’s physiological state during the first moments of interaction with the ECA. The project is funded by the ANR and is a collaboration between CNRS–ISIR at the University Pierre and Marie Curie in France and the Swiss Center for Affective Sciences at the University of Geneva in Switzerland.

ARIA-VALUSPA

January 2015 – December 2017

EU H2020 Collaborative Project

The ARIA-VALUSPA (Artificial Retrieval of Information Assistants – Virtual Agents with Linguistic Understanding, Social skills, and Personalised Aspects) project will create a ground-breaking new framework for the easy creation of Artificial Retrieval of Information Assistants (ARIAs) capable of holding multimodal social interactions in challenging and unexpected situations.

We focus on the collection and analysis of screen-mediated, face-to-face, multimodal and multisensor recordings of Novice-eXpert Interaction (NoXi Database). These data are then exploited to create complex interaction models that take into account unexpected events, such as interruptions, that may occur during the interaction with the system or that the ARIA system itself can proactively trigger to enhance cooperation, affiliation and engagement with the user.

Attitude Expression in Conversing Agent Groups

September 2014 – December 2015

In collaboration with Brian Ravenet, CADIA and the ENIB in Brest

In the context of the European project REVERIE (REal and Virtual Engagement in Realistic Immersive Environments), we simulated groups of conversing agents capable of expressing interpersonal attitudes (e.g. friendliness) through their nonverbal behavior (e.g. smiling and interpersonal distance).

We enhanced our computational model of group social attitudes by using the Impulsion Social AI Engine, developed by Claudio Pedica at CADIA, for the autonomous generation of spatial behavior in simulated group conversations. User studies conducted online and in immersive environments (i.e. a CAVE) in collaboration with the ENIB demonstrated that group attitudes can be effectively conveyed by our simulated groups of virtual agents.

VERVE

March 2014 – October 2014

In collaboration with healthcare specialists, academics and technology companies

The VERVE project aims to develop new technologies to support the treatment of elderly people who are at risk of social exclusion.

In this context, the common goal is to create serious games and 3D virtual environments that effectively support the target users. At the Multimedia Group, the focus is on the autonomous virtual agents that populate these 3D environments and engage users in multimodal interactions. The emphasis is on modeling the expression of social attitudes (e.g. friendliness) by designing and modeling the agents’ nonverbal behavior (e.g. facial expressions or territorial behavior in groups).

My effort in this project is twofold: I design and model the agents’ nonverbal behavior so that it supports both the expression of social attitudes and territoriality in group dynamics, and I design and conduct user studies to evaluate the effectiveness of these agents.

Icelandic Language and Culture Training in Virtual Reykjavík

March 2013 – March 2014

In collaboration with the University of Iceland

The objective of this project at CADIA was to simultaneously advance the state of the art in technology for virtual language training environments and bring the latest methods of language and culture learning to the instruction of Icelandic. The proposed method for learning Icelandic language and culture is to acquire basic communication skills in interactive lessons and then immediately put them to use in a 3D game that places the learner on a street corner in Reykjavík. The learner becomes the protagonist of a story that can only be completed by speaking with the various people in the simulated environment.

My effort in this project consisted of designing, modeling and developing both 3D assets (e.g. buildings, streets, sidewalks) and the interactive autonomous characters that populate the simulated environment, with particular emphasis on the nonverbal greeting behavior they exhibit towards the players.

Tinker

August 2012 – December 2012

In collaboration with Northeastern University and the Boston Museum of Science, USA

Tinker is a virtual museum guide agent that uses human relationship-building behaviors to engage museum visitors. The agent uses nonverbal conversational behavior, reciprocal self-disclosure and other relational behaviors to establish social bonds with visitors. Tinker can provide visitors with information on, and directions to, a range of exhibits in the museum, as well as discuss the theory and implementation underlying her own creation. This effort is a collaboration between the Relational Agents Group at Northeastern University (Boston, MA, USA) and the ComputerPlace exhibit at the Boston Museum of Science. The exhibit has been operational since September 2007 and has already been seen by several hundred museum visitors.

My effort was to develop a software module that detects, in real time, visitors approaching the exhibit using a Microsoft Kinect. Tinker was able to exhibit different nonverbal greeting cues (e.g. smiling or gazing more at the visitors) as the visitors got closer. The goal was to evaluate whether visitors who formed friendly (as opposed to hostile) impressions of the agent during the approach, based on its observed nonverbal behavior, (a) were more attracted to it and more encouraged to engage in an interaction, and (b) spent more time with the agent afterwards.
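The idea of greeting cues that change as a visitor approaches can be illustrated with a minimal Python sketch. It is not the module deployed with Tinker: it assumes the Kinect’s body tracking has already been reduced to one visitor distance in meters per frame (the sensor API is not shown), and the thresholds `FAR_M` and `NEAR_M`, the cue fields `smile_intensity` and `gaze_ratio`, and the helpers `is_approaching` and `cues_for_distance` are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical parameters: illustrative values, not those used with Tinker.
FAR_M = 4.0    # beyond this distance the visitor is not yet greeted
NEAR_M = 1.0   # at or within this distance the greeting cues are at full strength


@dataclass(frozen=True)
class GreetingCues:
    smile_intensity: float  # 0.0 = neutral face, 1.0 = broad smile
    gaze_ratio: float       # fraction of time the agent gazes at the visitor


def is_approaching(history: list[float], min_drop_m: float = 0.3) -> bool:
    """True if the tracked distance fell by at least min_drop_m over the window."""
    return len(history) >= 2 and history[0] - history[-1] >= min_drop_m


def cues_for_distance(distance_m: float, friendly: bool) -> GreetingCues:
    """Map a visitor's distance to nonverbal greeting cues for one condition."""
    if distance_m >= FAR_M or not friendly:
        # Visitor still far away, or the hostile condition: no smile, little gaze.
        return GreetingCues(smile_intensity=0.0, gaze_ratio=0.1)
    # Closeness grows linearly from 0 at FAR_M to 1 at NEAR_M, clamped at 1.
    closeness = min(1.0, (FAR_M - distance_m) / (FAR_M - NEAR_M))
    return GreetingCues(smile_intensity=closeness, gaze_ratio=0.1 + 0.7 * closeness)


# Example: a visitor walking from 4.5 m to 1.2 m in the friendly condition.
readings = [4.5, 3.8, 3.0, 2.1, 1.2]
if is_approaching(readings):
    for d in readings:
        print(d, cues_for_distance(d, friendly=True))
```

Ramping the cues with distance, rather than switching them on at a single threshold, is one simple way to make the greeting unfold gradually as the visitor gets closer; a real deployment would also need to smooth noisy sensor readings.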

AlwaysOn: Relational Companion Agents for Older Adults

August 2012 – December 2012

In collaboration with Northeastern University and WPI, USA

Can a virtual agent be installed in our homes for months, or years? The primary goal of AlwaysOn was to build companion agents that are always on and always available to provide social support and wellness coaching to isolated older adults who live alone in their homes. The agent supports a wide range of social interactions, including companionship dialogue, exercise and wellness promotion, social activity tracking and promotion, and memory improvement tasks.

Sharable Life Stories is the part of the project that aims at stimulating reminiscence and promoting social communication and cohesion with family and friends. A virtual agent provided a simplified interface to facilitate the recording of life stories and promoted reminiscence of positive events. During a recording, the agent exhibited appropriate verbal and nonverbal listening behavior (i.e. backchannels). Moreover, the agent securely shared the story with family members and relayed to users the feedback and comments that family members (or friends) made about the story.

μ-Transition

May 2012

Angelo Cafaro, Una Ösp Steingrímsdóttir and Úlfur Eldjárn

μ-Transition (Mu-Transition or Micro-Transition) is an interactive retro-style microscope that lets viewers experience transitions from colors to sounds. Viewers are the creators of their own audio-visual experience: they choose from a variety of colored liquids and interactively generate sounds according to changes in the liquids’ colors, amount of movement, blobs and brightness.

The microscope was built by combining an old slide projector with a vintage 4×5″ rangefinder camera made by Graflex and dated between 1961 and 1970 (borrowed from the photographer Eric Richard Wolf). A small, real digital microscope is placed in the camera’s lens mount and connected to a computer. The live feed is displayed on an LCD monitor mounted in the eyepiece on top of the machine. The liquids are made of food coloring, corn syrup, gelatin and Finish rinse aid, whose physical properties (e.g. surface tension and viscosity) were particularly suitable for the desired visual effects.

μ-Transition is the result of a group project for a joint course offered by the Schools of Computer Science and Engineering at Reykjavik University (RU) and the Departments of Fine Arts and Music at the Icelandic Academy of the Arts (LHÍ). The installation was exhibited during the Icelandic Festival of Electronic Arts (RAFLOST) in May 2012.

Group members:

Angelo Cafaro (RU, School of Computer Science)

Úlfur Eldjárn (LHÍ, Music Department)

Una Ösp Steingrímsdóttir (LHÍ, Fine Arts Department)

Supervisors:

Joseph Timothy Foley (RU, School of Engineering)

Hannes Högni Vilhjálmsson (RU, School of Computer Science)

Áki Ásgeirsson (LHÍ, Music Department)

Ragnar Helgi Ólafsson (LHÍ, Fine Arts Department)

Kjartan Ólafsson (LHÍ, Music Department)

HASGE

September 2010 – December 2013

In collaboration with CADIA and CCP Games, Iceland

The HASGE (Humanoid Agents and Avatars in Social Game Environments) project was a collaboration between CADIA and CCP Games aimed at developing new methods to create believable human behavior in animated characters for social game environments such as massively multiplayer online role-playing games (MMORPGs), in particular CCP’s EVE Online.

In collaboration with Raffaele Gaito, I automated the production of natural-looking idle gaze behavior in humanoid agents using observational data from targeted video studies, and developed a demo in the CADIAPopulus social simulation platform. The simulation focused on people walking alone down a shopping street in downtown Reykjavik, Iceland.

Briscola On-line

November 2010

Angelo Cafaro and Lorenzo Scagnetti

Briscola On-line is a multiplayer video game set in a retro-style 3D Italian bar. Players can both explore the environment and play an old Italian card game called Briscola. During the card game, players can also engage in a virtual face-to-face conversation with other players through a text chat. Their chat lines are automatically accompanied by an appropriate selection of nonverbal behaviors (i.e. hand gestures and gaze direction).

Simulating the Idle Gaze Behavior of Pedestrians in Motion

September 2008 – March 2009

In collaboration with Raffaele Gaito

In everyday life, people engage in social interactions with others through multiple modalities: verbal behavior (i.e. language) and nonverbal behavior (e.g. gaze, facial expressions, body language). Interestingly, gaze behavior also occurs in social situations where people are idling alone, that is, when they are not engaged in direct communication with anyone else but are still part of a dynamic social environment, such as a public place.

This project dealt with the generation of idle gaze behavior for autonomous virtual pedestrians in motion. The gaze behavior exhibited by the animated characters is generated autonomously, based on previous findings in human social psychology as well as targeted observations of real people walking alone down a shopping street in Reykjavik, Iceland. The simulation was the demo of my final project for my Master’s thesis in Computer Science and was part of a research project presented at the IVA 2009 conference.

Slugs

January 2008 – July 2008

Angelo Cafaro, Dario Scarpa, Giannicola Scarpa

Slugs is a turn-based 3D artillery video game inspired by the Worms series. The game features humorous, cartoon-style teams of slugs (voiced in Italian by Mara Ansalone) that are controlled by two human players. As in the original Worms series, the goal is to use various weapons (a bazooka and grenades) to kill the opponent’s team.

The game can be played in a first-person perspective or with a flying camera. The 3D world is rendered by the Ogre3D game engine and incorporates physics simulations powered by NVIDIA PhysX technology through the NxOgre bindings. All game objects are subject to forces generated by rocket launches and grenade explosions. Bumping into rocks or other props causes damage to the slugs.

An Eclipse RCP-Based Collaborative System

May 2006 – October 2006

Angelo Cafaro

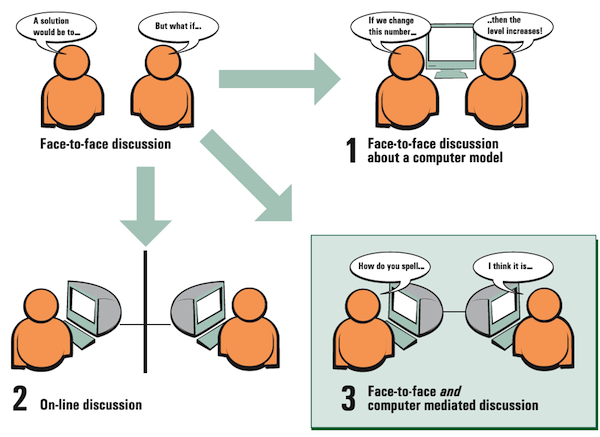

LEAD was a European Commission Sixth Framework Programme project focusing on networked-computing support for face-to-face classroom discussions between teachers and students. This project was part of the CoFFEE suite of applications supporting cooperative learning.

My effort (my undergraduate final project) was to design and implement a collaboration tool for threaded discussions in problem-solving sessions. The tool was developed as a plug-in for the Eclipse Rich Client Platform (RCP). The primary goals were to synchronize late-joining clients with discussions already in progress and to offer a viewer for playing back past discussions.
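Synchronizing late clients and replaying discussions can both be served by one data structure. The Python sketch below is only an illustration, not the code of the original tool (which was an Eclipse RCP plug-in); it assumes a hypothetical server-side, append-only log in which every post receives a sequence number and a timestamp. A late client asks for every post after the last one it has seen, and a playback viewer filters the log by time. The names `Post`, `DiscussionLog`, `since`, `playback` and `build_threads` are invented for this example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Post:
    seq: int               # server-assigned sequence number (total order)
    timestamp: float       # seconds since the session started
    author: str
    parent: Optional[int]  # seq of the post replied to; None starts a new thread
    text: str


@dataclass
class DiscussionLog:
    """Hypothetical append-only log of one threaded discussion."""
    posts: list[Post] = field(default_factory=list)

    def append(self, timestamp: float, author: str,
               parent: Optional[int], text: str) -> Post:
        # Replies may only point at posts that already exist in the log.
        if parent is not None and not 0 <= parent < len(self.posts):
            raise ValueError(f"unknown parent post {parent}")
        post = Post(len(self.posts), timestamp, author, parent, text)
        self.posts.append(post)
        return post

    def since(self, last_seen_seq: int) -> list[Post]:
        """Posts a client has not received yet (-1 = a client that just joined)."""
        return self.posts[last_seen_seq + 1:]

    def playback(self, until: float) -> list[Post]:
        """The discussion as it stood at time `until`, for a replay viewer."""
        return [p for p in self.posts if p.timestamp <= until]


def build_threads(posts: list[Post]) -> dict[Optional[int], list[int]]:
    """Group post numbers by parent so a client can redraw the thread tree."""
    children: dict[Optional[int], list[int]] = {}
    for p in posts:
        children.setdefault(p.parent, []).append(p.seq)
    return children


# Example: a client that joins after three posts receives the full history.
log = DiscussionLog()
log.append(0.0, "teacher", None, "How would you solve problem 1?")
log.append(12.5, "alice", 0, "Start from the boundary case.")
log.append(30.0, "bob", 0, "Or try induction.")
late = log.since(-1)
```

Because every post carries a server-assigned sequence number, a late joiner and an up-to-date client use the same request and differ only in the last number they have seen.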